2012 Colloquia

Spring 2012

Let A and B be function algebras on locally compact Hausdorff spaces X and Y, respectively. We give the characterization of surjective isometries from A onto B. We also investigate isometries between linear subspaces of C_0(X) and C_0(Y).

Friday, 11 May 2012

10:10 a.m. in Math 108

11:00 a.m. Refreshments in Math Lounge 109

How different structures of a given space interact with each other is a basic question. The Mazur-Ulam theorems states that the metric structure of a normed linear space encodes the linear structure. A version for metric groups is exhibited and applications are also given.

Thursday, 10 May 2012

10:10 a.m. in Math 103

11:00 a.m. Refreshments in Math Lounge 109

Doctoral Dissertation Defense

Wednesday, May 9, 2012

10:10 am in Math 108

Positional games are played by two players who alternately attempt to gain control of winning configurations. In traditional positional games, the players alternately place pieces in open positions on a game board. In this defense we explore a variation on this game where players alternately make a hop move instead. During a hop a player moves one of his own pieces and replaces it with one of his opponent’s. We explore the Hop-Positional game played on two classes of boards: $AG(2,q)$ and $PG(2,q)$. In particular we explore how a counter-intuitive strategy leads to an optimal result for the second player on many boards. Once we have establish our results on these two classes of boards we define a new type of board called a nested board, in which the points of a board are replace with copies of another board. We will discuss two types of games on the class of nested boards. First the traditional positional game in which players alternately place pieces in open positions, and second the Hop-positional game where players alternately hop on the nested board. We divide the nested boards of interest into four classes based on the structure of the component boards. We will demonstrate threshold values for the size of the nested board in each class in order to guarantee the second player has a drawing strategy in each game.

Dissertation Committee:

Jenny McNulty, Chair (Mathematical Sciences),

Mark Kayll (Mathematical Sciences),

George McRae (Mathematical Sciences),

Mike Rosulek (Computer Science),

Nikolaus Vonessen (Mathematical Sciences)

This talk outlines a situation related to modeling competing yeast populations in continuous environments, where a susceptible population can outcompete an infectious population. The specific system involves the killer-virus infected yeast and the uninfected yeast interactions. The killer virus infects yeast and gives it the ability to produce a toxin which kills uninfected yeast cells. It is natural to suspect that the upkeep of a virus and the production of toxin detracts from the resources available for growth. Cell density in a chemostat is determined by its growth rate constant and the dilution rate constant of the system. If the dilution rate term is large compared to the growth rate term, then the yeast population is "washed out" from the chemostat. The possibility of a dilution rate being high enough to washout the killer yeast, but not the susceptible (non-killer) yeast, is explored using the mathematical model, and a region in a parameter space where the "washout" of the infected yeast can happen is determined using experimental data.

Monday, 7 May 2012

3:10 p.m. in Math 311

4:00 p.m. Refreshments in Math Lounge 109

Doctoral Dissertation Defense

Tuesday, May 1, 2012

4:10 pm in Math 103

With technological, research, and theoretical advancements, the amount of data being generated for analysis is growing rapidly. In many cases, the number of subjects may be small, but the number of measurements taken on each subject may be very large. Consider, for example, two groups of patients. The subjects in one group are diseased and the other subjects are not. Over 9,000 relative fluorescent unit (RFU) signals, measures of the presence and abundance of proteins, are collected in a microarray or protoarray from each subject. Typically these kind of data show marked skewness (departure from normality) which invalidates the multivariate normalbased theory. What is more, due to the cost involved, only a limited number of subjects have to be included in the study. Therefore, the standard large-sample asymptotic theory cannot be applied. It is of interest to determine if there are any differences in RFU signals between the two groups, and more importantly, if there are any RFU signal effects which depend on the presence or absence of the disease. If such an interaction is detected, further research is warranted to identify any of these biological signals, commonly known as biomarkers.

To address these types of phenomena, we present inferential procedures in two-factor repeated measures multivariate analysis of variance (RM-MANOVA) models where the covariance structure is unknown and the number of measurements per subject tends to infinity. Both in the univariate case, in which the number of dimensions or response variables is one, and the multivariate case, in which there are several response variables, different sums of squares and cross product matrices are proposed to compensate for the unknown structure of the covariance matrix and unbalanced group sizes. Based on the new matrices, we present some multivariate test statistics, deriving their asymptotic distributions under fairly general conditions. We then use simulation results to assess the performance of the tests, and we analyze a real data set to demonstrate their applicability.

Dissertation Committee:

Solomon W. Harrar, Chair (Mathematical Sciences),

Johnathan M. Bardsley (Mathematical Sciences),

Jon Graham (Mathematical Sciences),

Jesse V. Johnson (Computer Science)

David Patterson (Mathematical Sciences)

In the dance of statistical academia, theory is often a step or two behind necessity. Booming advancements in technology and research over recent decades have created a need for statistical theory to address the real-life phenomena from which data so often arise. Traditionally, whether with proper justification or out of the need and desire for mathematical tractability, many assumptions are imposed in statistical methods. For instance, independence among subjects is the theoretical bedrock of the classical Central Limit Theorem first proposed over a century ago. Since that time much has changed. The aim of my research is to present inferential procedures for two-factor repeated measurements analysis of variance, both when the number of response variables is one or when it is several, and when the number of measurements taken on each subject is very large (tends to infinity). To date, there is some research relinquishing assumptions regarding the covariance structure among such data, and there are many results dealing with dependent measurements within each subject. However, to the best of our knowledge, no work has been done in both of these areas with the added relaxation of the assumption on the underlying distributional assumption. Thus far it has been assumed that the data arise from a normal or multivariate normal distribution, a condition which is dismissed in this research seeking robustness.

To those ends, this talk introduces robust, formal tests of significance along with their asymptotic distributions, and real data are analyzed to illustrate the applicability of these new methods. As an introduction, the focus will be the univariate case, that is, when the number of response variables is one. New methods are proposed which allow us to more tractably address the asymptotic nature of the test statistics. As a result, some background on the direct sum, Kronecker product, and vec matrix operators will be also be presented. A simulation discussion and an example analyzing Parkinson’s disease will be included, altogether gearing up for the presentation of the dissertation defense.

Monday, 30 April 2012

3:10 p.m. in Math 103

4:00 p.m. Refreshments in Math Lounge 109

Doctoral Dissertation Defense

Thursday, April 26, 2012

3:10 pm in Math 108

A regular lattice of spatially dependent binary observations is often modeled using the autologistic model. It is well known that likelihood-based inference methods cannot be employed in the usual way to estimate the parameters of the autologistic model due to the intractability of the normalizing constant for the corresponding joint likelihood. Two popular and vastly contrasting approaches to parameter estimation for the autologistic model are maximum pseudolikelihood (PL) and Markov Chain Monte Carlo Maximum Likelihood (MCMCML). Two newer and less understood approaches are maximum generalized pseudolikelihood (GPL) and maximum block generalized pseudolikelihood (BGPL). Both of these newer methods represent varying degrees of compromise between maximum pseudolikelihood and MCMCML.

In this defense we will establish the strong consistency of the estimators resulting from both GPL and BGPL, and we will additionally present generalizations of GPL and BGPL for use in the space-time domain. The relative performances of all four estimation methods from a large-scale autologistic model simulation study, as well as from a small-scale spatio-temporal autologistic model simulation study, will be presented. Finally, all four estimation methods will be implemented to describe the spread of fire occurrence in a temperate grasslands ecosystem of Oregon and Washington using the autologistic model.

Dissertation Committee:

Jon Graham, Chair (Mathematical Sciences),

Johnathan M. Bardsley (Mathematical Sciences),

Solomon W. Harrar (Mathematical Sciences),

Jesse V. Johnson (Computer Science),

David Patterson (Mathematical Sciences)

For a binary response variable the logistic model is commonly implemented to describe the probability of success as a function of one or more covariates. As long as the response variables are independent, such a paradigm is appropriate. However, when binary responses on a regular lattice are observed in space, spatial dependencies typically exist and the logistic model is rendered invalid. The autologistic model is an intuitive extension of the logistic model that accommodates such a lack of spatial independence. Unfortunately, the normalizing constant for its joint likelihood function is usually intractable, and, therefore, methods other than maximum likelihood are needed to estimate the parameters of the autologistic model.

In this talk we will review the logistic model, introduce the autologistic model, and present four methods of parameter estimation for the autologistic model, including pseudolikelihood, Markov chain Monte Carlo maximum likelihood, generalized pseudolikelihood, and block generalized pseudolikelihood. A fire occurrence data set from Oregon and Washington will be used throughout the presentation as a means to motivate and illustrate the aforementioned concepts. As this talk is a prelude to a forthcoming defense, various topics comprising the defense presentation will also be previewed.

Monday, 23 April 2012

3:10 p.m. in Math 103

4:00 p.m. Refreshments in Math Lounge 109

In this talk we explore variations on Tic-Tac-Toe. We will consider positional games played using a new type of move called a hop. A hop involves two parts: move and replace. in a hop the positions occupied by both players will change: one will move a piece to a new position and one will gain a new piece in play. We play hop-positional games on a traditional Tic-Tac-Toe board, on the finite planes AG(2,q) and PG(2,q) as well as on a new class of boards which we call nested boards. The game outcomes for several classes of boards are determined using weight functions to define player strategies.

Monday, 16 April 2012

3:10 p.m. in Math 103

4:00 p.m. Refreshments in Math Lounge 109

I will present a biologically-based mathematical model that accounts for several features of human sleep and demonstrate how particular features depend on interactions between a circadian pacemaker and a sleep homeostat. The model is made up of regions of cells that interact with each other to cause transitions between sleep and wake as well as between REM and NREM sleep. Analysis of the mathematical mechanisms in the model yields insights into potential biological mechanisms underlying sleep and sleep disorders including stress-induced insomnia and fatal familial insomnia.;

Monday, 26 March 2012

3:10 p.m. in Math 103

4:00 p.m. Refreshments in Math Lounge 109

Monday, 19 March 2012

3:10 p.m. in Math 103

4:00 p.m. Refreshments in Math Lounge

Postponed until fall

The construct of creativity became one of the focal areas of my studies 14 years ago. This focus on creativity began in a domain specific manner within mathematics- the literature which by and large relied on nostalgic accounts from eminent samples, subsequently expanded into disciplines like psychology, psychometrics and sociology that studied the construct more rigorously. In this colloquium talk, we will unpack the construct of "creativity" with the aid of different confluence theories of creativity from psychology and sociology to understand how creativity functions at the individual, institutional and societal levels (with cultural limitations). Contemporary findings from the literature in psychology, in addition to examples from the History of Science will be used to unpack the various constructs involved in the study of creativity, with the purpose of giving a bird’s eye view of how ideas survive, mutate and propagate in academic scholarship and in general. Some implications for "measuring" creativity and fostering talent development are discussed.

Monday, 12 March 2012

3:10 p.m. in Math 103

4:00 p.m. Refreshments in Math Lounge 109

Note: This is a shorter version of the keynote given at the 2011 Annual UMURC organized by the Davidson Honors College on 04.15.2011.

The process of learning to teach mathematics is multifaceted and includes a number of domains known to influence the instructional practices teachers employ and, consequently, the learning opportunities of their students. My research focuses on two domains: Mathematical Knowledge for Teaching (MKT) (Ball, Hill, & Bass, 2005) and conceptions about mathematics teaching and learning. Both of these domains have become critical aspects of mathematics teacher education; however, little is known about how they evolve over time. This presentation reports on an ongoing inquiry into the mathematical development of elementary teachers and factors affecting the progression of MKT and conceptions aligned with effective teaching practices.

I will begin by discussing a collaborative effort to construct a comprehensive set of instruments for longitudinally studying the evolution of elementary teachers’ conceptions (here, conceptions represents three central and interrelated subconstructs: beliefs, attitudes, and dispositions). Unlike existing measures, the Mathematics Experiences and Conceptions Surveys (MECS) are being designed to correspond with significant benchmarks in teacher preparation. Instrument validity and reliability are being established through data collected at four institutions over two years. Initial results from these analyses and plans for continued instrumentation will be shared.

Preliminary findings will then be presented from data collected at two universities during the fall semester of 2011. Preservice elementary teachers enrolled in mathematics methods courses completed pre- and post-administrations of the MECS and MKT Measures developed by the Learning Mathematics for Teaching Project (Hill, Schilling, & Ball, 2004). ANOVA results for aggregate data showed significant gains in MKT and attitudes towards teaching mathematics over the duration of one semester. To examine these changes, regression models were developed in search of explanatory variables. Additional analyses explored potential relationships among MKT, attitudes, beliefs, dispositions, and experiences afforded by methods courses and related fieldwork. Initial observations and plans for future research will be discussed.

Ball, D. L., Hill, H. C., & Bass, H. (2005). Knowing mathematics for teaching: Who knows mathematics well enough to teach third grade and how can we decide? American Educator, Fall, 14-46.

Hill, H. C., Schilling, S. G., & Ball, D. L. (2004). Developing measures of teachers' mathematics knowledge for teaching. The Elementary School Journal, 105(1), 11-30.

Thursday, 1 March 2012

4:10 p.m. in Math 103

3:30 p.m. Refreshments in Math Lounge 109

Pedagogical content knowledge is an important construct in teacher education (Schulman, 1987). This category of teacher knowledge extends beyond prerequisite knowledge of subject matter to a dimension of subject matter knowledge that is used for teaching. Ball and Hill (2005) have developed a measure of pedagogical content knowledge in mathematics and have shown that teachers’ possession of such knowledge is positively related to student achievement. Not surprisingly, many recent educational reform efforts in mathematics have been directed towards the development of pedagogical content knowledge in teachers.

In this presentation I will share with you the results of my ongoing research associated with the Middle School Mathematics Specialist program at the University of Wisconsin-Madison. The program is aimed at the development of pedagogical content knowledge for current middle school mathematics teachers. In particular, my research investigates the role of curricula in the development of pedagogical content knowledge of participating teachers in the program. Ultimately, I seek to identify and characterize the important aspects of educational activities employed in the Middle School Mathematics Specialist program that are linked to measurable gains in teacher pedagogical content knowledge and student learning.

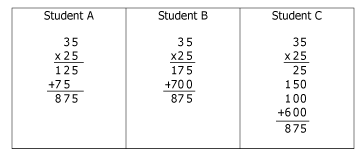

Content Knowledge

Solve: 35 x 25 =

Pedagogical Content Knowledge

Imagine that you are working with your class on multiplying large numbers. Among your students’ papers you notice that some have displayed their work in the following ways:

Which of these students would you judge to be using a method that could be used to multiply any two whole numbers? (Ball and Hill, 2005)

Thursday, 23 February 2012

4:10 p.m. in Math 103

3:30 p.m. Refreshments in Math Lounge 109

A November 1996 proof of the Pythagorean theorem a2+b2=c2, by Frank Burk in The College Mathematics Journal (page 409) led to my efforts (with Jim Hirstein) to find proofs to other geometry theorems using Burk's method. In this talk, among other results, I will show a new proof of Ptolemy's theorem and inequality. I will also discuss a proof of the Erdős-Mordell inequality (1937) for triangles, and some of our conjectures for convex quadrilaterals and polygons.

Monday, 13 February 2012

3:10 p.m. in Math 103

4:00 p.m. Refreshments in Math Lounge 109

In this talk I will argue that certain (distributed) algorithms may be designed by carrying out a brute force search, using massive parallelism, and a model checker. To demonstrate the approach, I will report on an attempt to construct a (two-processor, single-use) reliable TEST&SET bit using fewer than 7 component TEST&SET bits, of which at most 1 may be faulty. A program has been designed to systematically generate program texts of a specific form. Each of these texts serves as input to a model checker to determine whether that text implements a reliable TEST&SET bit. Due to the large amount of possible program texts, we use parallelism to speed up the search for a solution.

The problem of constructing a reliable TEST&SET bit with fewer than 7 component TEST&SET bits is motivated by a construction due to Afek, Greenberg, Merritt, and Taubenfeld, who have shown that 7 component TEST&SET bits, of which at most 1 may be faulty, are sufficient to implement a reliable TEST&SET bit. Afek et. al. have not proved that 7 component TEST&SET bits are also necessary to implement a reliable TEST&SET bit. Thus, if the approach is successful, the construction of Afek, Greenberg, Merritt, and Taubenfeld has been improved.

I will also argue that the advocated approach is applicable to comparing programs, all designed to achieve a similar objective.

The search program ran on a cluster (with about 60 processors), good for prototyping, at SKC. Thereafter, the program ran for several months at the Royal Mountain Supercomputer Centers (RMSC) in Butte, Montana. (It used almost 300 processors.) Recently, RMSC has shut down all its compute nodes, because of budgetary constraints. I am now actively looking for new resources to run my program.

Monday, 6 February 2012

3:10 p.m. in Math 103

4:00 p.m. Refreshments in Math Lounge 109

Fall 2012

We consider linear inverse problems of form Az = u, z ∈ Z, u ∈ U. Z and U are Banach latices, A is a linear bounded operator. We provide a technique for estimating the error of an approximate solution under some a priori assumptions on the exact solution. The problem of calculating the error estimate is reduced to several linear programming problems.

In the second part of the talk, an application of these techniques to ice sheet bed elevation measurements is considered. We are interested in identifying the ice thickness from the velocity data, weather observations and satellite measurements. Data on ice thickness along certain lines are also available. The problem is to identify the thickness between these lines using the governing equations and some a priori regularity assumptions.

Monday, 10 December 2012

3:10 p.m. in Math 211

4:00 p.m. Refreshments in Math Lounge 109

Cancelled

Monday, 26 November 2012

3:10 p.m. in Math 103

4:00 p.m. Refreshments in Math Lounge 109

This study is an examination of the evolution of one novice's teachers advice network on Twitter. The study follows the novice teacher over a nine month period as she transitions from student teacher to full time teacher. The study applies methods from the field of Social Network Analysis to analyze her network. The results are then compared to other studies of face-to-face novice teacher networks.

Monday, 19 November 2012

3:10 p.m. in Math 103

4:00 p.m. Refreshments in Math Lounge 109

Methods of supervised classification seek to segment a data set into classes based on a priori knowledge. Classification techniques are used on a wide range of scientific problems. For example, ecologists classify aerial images in order to determine how landscapes change over time, while the U.S. Post Office uses classification techniques for handwriting recognition. Other, non-imaging examples include spam detection systems for email and patient diagnosis in medicine.

Supervised classification techniques require the user to provide the set of classes in the data set, as well as a training set for each class. The training set consists of a set of measurements for which its class is known. A classifier is built from the training data, which is then applied to new observations.

The support vector machine (SVM) is a well-known method for supervised classification and is well documented throughout the literature. In constructing an SVM classifier, it is possible to formulate it in such a way that a quadratic minimization problem arises, with both equality and bound constraints.

An introduction to classification, with a focus on image data sets, will be presented, followed by a derivation of the SVM classifier that yields the above mentioned optimization problem. Image data sets will be presented to visually demonstrate the SVM classifier and to compare performance of various constrained quadratic solvers, two of which the presenter developed.

Monday, 5 November 2012

3:10 p.m. in Math 103

4:00 p.m. Refreshments in Math Lounge 109

In the usual Category of Graphs, the graphs allow only one edge to be incident to any two vertices, not necessarily distinct. Also the usual graph morphisms must map edges to edges and vertices to vertices while preserving incidence. We refer to these morphisms as strict morphisms. We relax the condition on the graphs allowing any number of edges to be incident to any two vertices, as well as relaxing the condition on graph morphisms by allowing edges to be mapped to vertices, provided that incidence is still preserved. We call this broader graph category The Category of Conceptual Graphs, and define four other graph categories created by combinations of restrictions of the graph morphisms as well as restrictions on the allowed graphs.

In 1927 Emmy Noether proved three important theorems concerning the behavior of morphisms between groups. These three theorems are called Noether Isomorphism Theorems, and they have been found to apply to morphisms for many mathematical structures. The first of the Noether Isomorphism Theorems when generalized is called the Fundamental Morphism Theorem, and the Noether Isomorphism Theorems follow as corollaries to the Fundamental Morphism Theorem. We investigate how the Fundamental Morphism Theorem applies to these categories of graphs.

Monday, 29 October 2012

3:10 p.m. in Math 103

4:00 p.m. Refreshments in Math Lounge 109

Suppose a wandering philosopher regularly visits every major city in an area, but she gets bored if she travels on the same path, and therefore never wants to use a road twice. How many times can she travel to every city before she is forced to reuse a road? In graph theory, this problem is finding as many edge-disjoint Hamiltonian cycles as possible.

Recently, in the case where each vertex has large degree (each city has many roads leading out of it), Christofides, Kühn, and Osthus proved you could find a surprisingly large number of Hamiltonian cycles. They used one of the most powerful tools in graph theory, originated by Szemerédi, known as the Regularity Lemma. However, there are some drawbacks to using the Regularity Lemma, and recently there has been a push to develop tools and proof methods that replace it.

We developed a partition theorem similar in flavor to the Regularity Lemma that is easier to use. Using our partition theorem, we were able to give a shorter proof of the Christofides, Kühn, Osthus result that applies in more cases. Proving our partition theorem will allow us to talk about another of the most important ideas in graph theory: the probabilistic method.

Monday, 15 October 2012

3:10 p.m. in Math 103

4:00 p.m. Refreshments in Math Lounge 109

Photonic Doppler Velocimetry (PDV) is a measurement of the Doppler shift of laser light reflecting off of a moving surface, and it is usually used to determine moving surface positions under extreme temperatures, pressures, and energies. The actual measurements are voltages on a digital oscilloscope, which must be mathematically processed to back out the changing position of the surface. Position is usually parameterized by local polynomial fitting, and it is important to have some estimates of the "error bars" of the fit. Seemingly unrelated, the Peano Kernel Theorem is a century-old result giving a special kernel formula for numerical quadrature schemes, but it turns out that it can be adapted to give error estimates for local polynomial fits, which makes it relevant for PDV analysis. The proof of the generalized result uses basic Fourier analysis, but finding the right setting in which the "easy" proof actually holds requires the theory of Laplace transformable tempered distributions. We will describe the experimental application driving this work, the proof, and the abstract harmonic analysis needed to formulate the generalized Peano Kernel Theorem.

Monday, 8 October 2012

3:10 p.m. in Math 103

4:00 p.m. Refreshments in Math Lounge 109

Piezoelectric, magnetic and shape memory alloy (SMA) materials offer unique capabilities for energy harvesting and reduced energy requirements in aerospace, aeronautic, automotive, industrial and biomedical applications. For example, the capability of lead zirconate titanate (PZT) to convert electrical to mechanical energy, and conversely, poises it for energy harvesting whereas the large work densities of SMA are being utilized in applications including efficient jet engine design. However, all of these materials exhibit creep, rate-dependent hysteresis, and constitutive nonlinearities that must be incorporated in models and designs to achieve their full potential. Furthermore, models and control designs must be constructed in a manner that incorporates parameter and model uncertainties and permits predictions with quantified uncertainties. In this presentation, we will discuss Bayesian techniques to quantify uncertainty in nonlinear distributed models arising in the context of smart systems. We will also discuss the role of these techniques for subsequent robust control design.

Monday, 1 October 2012

3:10 p.m. in Math 103

4:00 p.m. Refreshments in Math Lounge 109

A statistical analysis of the uncertainties of modeling, with the nonlinear models arising in engineering and science especially in mind, is becoming routine due to computational methods such as Markov chain Monte Carlo (MCMC) or Bootstrap. However, 'large' models, either in terms of CPU times or the dimension of the state, are still a challenge. We present some recent ideas how to minimize the CPU times of the sampling methods as well as to achieve low memory approximations for high dimensional problems. The primary targets here are weather prediction and climate models, studied in collaboration with FMI (Finnish Meteorological Institute) and ECMWF (European Centre for Medium-Range Weather Forecasts, UK).

Monday, 24 September 2012

3:10 p.m. in Math 103

4:00 p.m. Refreshments in Math Lounge 109

Monday, 17 September 2012

3:10 p.m. in Math 103

4:00 p.m. Refreshments in Math Lounge 109

Geosciences Colloquium:

4:10 p.m. - CHCB 304